Mira helps you analyze location-based segments across a variety of KPIs.

How we work with you

Attribution tests are typically very low touch; we help configure and execute the tests, and all the heavy lifting happens on the backend.

1. Design an experiment

We help you configure a control and test group (e.g. unexposed vs. exposed consumers) based on what you are setting out to prove.

2. Measure KPIs

Track a variety of conversion events, including: TV tune-in, walk-in conversions, brand recall, app installs, web conversions, and more.

3. Generate reports

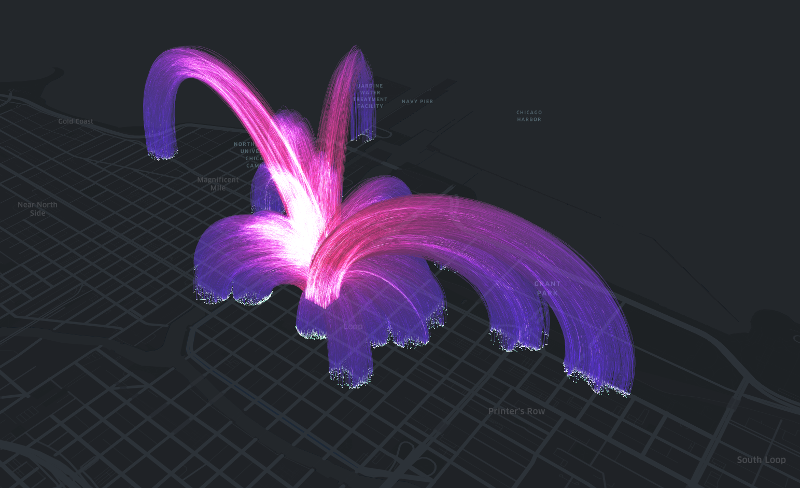

We package results in beautiful hosted or white-labeled reports and visualizations.

Designing an experiment

Attribution tests try to detect whether a campaign has an effect on a particular KPI. There are a few steps we take to ensure a sound result.

Creating a control

In the case of out-of-home, the control group should exclude any consumers exposed to a campaign. Additionally, the control should be as similar as possible to the test group to lessen the effect of latent (unobserved) variables. We construct the control to mirror the distribution of the test group along various attributes, such as age, household income (HHI), location, and others.
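As an illustration of what mirroring a distribution can look like, here is a minimal sketch using stratified sampling. It is not a description of Mira's actual pipeline: the function name `build_matched_control`, the record fields (`age`, `city`, `exposed`), and the choice of stratified sampling are all assumptions made for the example.

```python
import random
from collections import Counter, defaultdict
from fractions import Fraction

def build_matched_control(test_group, candidates, keys, seed=0):
    """Pick unexposed candidates so each stratum keeps the test group's share.

    Hypothetical helper: each person is a dict holding the attributes in
    `keys` plus a boolean "exposed" flag.
    """
    rng = random.Random(seed)

    def stratum(person):
        return tuple(person[k] for k in keys)

    # How many test-group members fall in each attribute combination.
    test_counts = Counter(stratum(p) for p in test_group)
    if not test_counts:
        return []

    # Only unexposed consumers are eligible for the control.
    pool = defaultdict(list)
    for person in candidates:
        if not person["exposed"]:
            pool[stratum(person)].append(person)

    # Largest multiplier that every stratum can satisfy. It is zero when
    # some test stratum has no unexposed candidates at all.
    scale = min(Fraction(len(pool[s]), n) for s, n in test_counts.items())

    control = []
    for s, n in test_counts.items():
        control.extend(rng.sample(pool[s], int(n * scale)))
    return control
```

In this sketch, candidates in strata that never appear in the test group are simply ignored, and the control size is limited by the scarcest stratum. A real system would likely use coarser buckets or weighting when some strata are thin.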

Selecting a KPI

If a KPI has a very low conversion rate in general, it is harder to detect lift. Thus, we must make reasonable decisions when choosing the conversion environment in which the test will be performed.

Ensuring a large sample

We want to make sure we have enough observations to validate whatever result we observe. Before we run a test, we'll analyze how many observations we have had historically to try to assess the test's feasibility.
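One way to frame a feasibility check like this is to ask how much statistical power a given number of observations would buy. The sketch below uses the standard normal approximation for a one-sided comparison of two proportions. The function name, the default `alpha`, and the assumption of equal group sizes are illustrative choices, not details taken from Mira's process.

```python
from math import sqrt
from statistics import NormalDist

def achieved_power(p_control, p_test, n_per_group, alpha=0.05):
    """Approximate power of a one-sided two-proportion z-test.

    Assumes equal group sizes and that p_test >= p_control is the
    effect of interest. All inputs are hypothetical planning values.
    """
    nd = NormalDist()
    p_bar = (p_control + p_test) / 2
    # Standard error under the null (both groups share one rate).
    se_null = sqrt(2 * p_bar * (1 - p_bar) / n_per_group)
    # Standard error under the alternative (rates differ).
    se_alt = sqrt(
        p_control * (1 - p_control) / n_per_group
        + p_test * (1 - p_test) / n_per_group
    )
    z_alpha = nd.inv_cdf(1 - alpha)
    return nd.cdf(((p_test - p_control) - z_alpha * se_null) / se_alt)
```

Plugging in a historical observation count for `n_per_group`, together with a conversion rate and lift borrowed from comparable campaigns, gives a rough sense of whether the test is worth running. When the two rates are equal, the function returns `alpha`, which is the false positive rate by construction.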

Conversion Environments

Measure the effect of your campaigns on a variety of KPIs

TV Tune-In

Measure how many devices in each group later tuned in to a TV show or channel. This can be done for a particular episode or a series, and can also factor in DVR viewing.

Walk-In Conversion

Measure how many devices in each group were later observed at one or many target locations. For retail, this can also be tied to credit card purchases through partner integrations.

Web Conversion

Measure how many devices in each group later visited a web landing page or performed some action, such as a sign-up or purchase. This is done through the placement of a web pixel.

In-App Behavior

Measure in-app metrics such as installs or first order/purchase against devices in either group. Achieved through integrations with TUNE, Kochava, AppsFlyer, and several other SDKs.

Brand Recall

Measure how well consumers in either group were able to recall a particular brand or advertisement. Achieved by delivering surveys directly to mobile devices.

Frequently Asked Questions

How do you know someone was exposed to OOH creative?

We consider a consumer exposed if they had the opportunity to view creative. This takes into account the bearing, distance, and field of view from which one can see a unit, as well as a user's previous location, and in digital, the time an ad rolled.
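To make the geometry concrete, here is a rough sketch of an opportunity-to-view check built from distance, bearing, and field of view. Everything in it is an assumption for illustration: the function names, the dictionary fields (`lat`, `lon`, `facing_deg`), and the thresholds are hypothetical, and the timing check for digital units is left out.

```python
from math import radians, degrees, sin, cos, atan2, sqrt

EARTH_RADIUS_M = 6_371_000

def distance_m(a, b):
    """Haversine distance in meters between two {'lat', 'lon'} points."""
    lat1, lat2 = radians(a["lat"]), radians(b["lat"])
    dlat = lat2 - lat1
    dlon = radians(b["lon"] - a["lon"])
    h = sin(dlat / 2) ** 2 + cos(lat1) * cos(lat2) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_M * atan2(sqrt(h), sqrt(1 - h))

def bearing_deg(a, b):
    """Initial compass bearing from a to b, in degrees (0 = north)."""
    lat1, lat2 = radians(a["lat"]), radians(b["lat"])
    dlon = radians(b["lon"] - a["lon"])
    x = sin(dlon) * cos(lat2)
    y = cos(lat1) * sin(lat2) - sin(lat1) * cos(lat2) * cos(dlon)
    return (degrees(atan2(x, y)) + 360) % 360

def angle_diff(a, b):
    """Smallest absolute difference between two compass angles."""
    d = abs(a - b) % 360
    return min(d, 360 - d)

def had_opportunity_to_view(prev, curr, unit,
                            max_distance_m=150,   # hypothetical threshold
                            unit_fov_deg=120,     # hypothetical threshold
                            viewer_fov_deg=120):  # hypothetical threshold
    # 1. Distance: the device must be close enough to read the unit.
    if distance_m(curr, unit) > max_distance_m:
        return False
    # 2. Unit field of view: the device must be in front of the face.
    if angle_diff(bearing_deg(unit, curr), unit["facing_deg"]) > unit_fov_deg / 2:
        return False
    # 3. Direction of travel, inferred from the previous location,
    #    must point roughly toward the unit.
    heading = bearing_deg(prev, curr)
    return angle_diff(heading, bearing_deg(curr, unit)) <= viewer_fov_deg / 2
```

For example, a device walking north toward a south-facing unit passes all three checks, while a device walking away from the same unit fails the direction-of-travel check even though it is within range.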

What type of test do you run?

We are trying to demonstrate that one group (exposed) has a higher conversion rate than another (unexposed), so we typically run a power analysis of two independent proportions.
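Once a test has run, the comparison of two independent proportions can be sketched as a one-sided pooled z-test, shown below. This is one common way to perform such a comparison and is offered as an assumption, not as the exact procedure used in Mira's reports. The function name and arguments are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_exposed, n_exposed, conv_unexposed, n_unexposed):
    """One-sided z-test that the exposed rate exceeds the unexposed rate.

    Returns (z, p_value, relative_lift). Assumes both groups have at
    least one conversion, so the pooled rate and the lift are defined.
    """
    p_exposed = conv_exposed / n_exposed
    p_unexposed = conv_unexposed / n_unexposed
    # Pooled rate under the null hypothesis of no difference.
    pooled = (conv_exposed + conv_unexposed) / (n_exposed + n_unexposed)
    se = sqrt(pooled * (1 - pooled) * (1 / n_exposed + 1 / n_unexposed))
    z = (p_exposed - p_unexposed) / se
    p_value = 1 - NormalDist().cdf(z)
    relative_lift = (p_exposed - p_unexposed) / p_unexposed
    return z, p_value, relative_lift
```

A call such as `two_proportion_z_test(300, 10_000, 200, 10_000)` compares a 3% exposed rate with a 2% unexposed rate. A small p-value suggests the exposed group converts at a higher rate than chance alone would explain.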

How many impressions are needed to ensure a statistically significant result?

Whether or not a result is statistically significant depends partly on what that result is, not just the sample size. Therefore, it is impossible to quote a certain number of observations before a test is run that guarantees a result will be significant, because we have yet to observe the conversion rates. We attempt to estimate a sample size using conversion rates and lifts from similar campaigns we have run in the past as a guide.
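As a rough illustration of that kind of estimate, the sketch below applies the standard normal-approximation formula for the sample size needed to compare two proportions. The baseline rate and relative lift are planning guesses (for example, borrowed from past campaigns), and the function name, the default `alpha` and `power`, and the one-sided framing are assumptions made for this example.

```python
from math import ceil, sqrt
from statistics import NormalDist

def required_sample_size(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate observations needed per group (one-sided test).

    baseline_rate: assumed conversion rate of the unexposed group.
    relative_lift: assumed lift, e.g. 0.10 for a 10% relative increase.
    """
    nd = NormalDist()
    p0 = baseline_rate
    p1 = baseline_rate * (1 + relative_lift)
    p_bar = (p0 + p1) / 2
    z_alpha = nd.inv_cdf(1 - alpha)
    z_beta = nd.inv_cdf(power)
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p0 * (1 - p0) + p1 * (1 - p1))
    ) ** 2
    return ceil(numerator / (p1 - p0) ** 2)
```

Holding the relative lift fixed, a lower baseline rate pushes the required sample size up sharply, which is also why KPIs with very low conversion rates make lift harder to detect. The output is only as good as the guessed inputs, so it guides planning but does not guarantee significance.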

Want to learn more?

Email info@mira.co for general requests or sales@mira.co for sales requests.